Building Scalable Web Applications with PHP and Kubernetes

Learn how to deploy and manage scalable PHP web applications with Kubernetes in this step-by-step guide.

In today's digital age, building a web application that can handle lots of traffic and stay up and running even if something goes wrong is crucial for success. However, scaling a web app can be challenging, especially if your traffic patterns are unpredictable or your app needs to be available all the time. That's where Kubernetes comes in.

Kubernetes is an open-source container orchestration platform that can help you automate deployment, scaling, and management of containerized applications. In this post, we'll explore how to use Kubernetes to build a scalable web application with PHP. We'll cover everything from setting up a Kubernetes cluster to deploying and managing your PHP app in a containerized environment.

Here are the topics that we'll cover in this post:

- Introduction to Kubernetes

- Setting up a Kubernetes cluster

- Packaging your PHP app in a container for Kubernetes deployment

- Configuring your Kubernetes deployment for your PHP app

- Scaling your PHP app up and down as needed to handle traffic spikes

- Ensuring high availability and fault tolerance with service discovery and load balancing

- Monitoring and logging for performance tracking and issue identification

- Continuous integration and delivery (CI/CD)

- Conclusion

Introduction to Kubernetes: What it is and how it can help you scale your web app

Kubernetes is a powerful tool for automating the deployment, scaling, and management of containerized applications. It provides a platform for managing and orchestrating the deployment of your applications, making it easy to scale your web app up and down as needed.

At its core, Kubernetes is a system for managing containerized applications across a cluster of nodes. It takes care of the scheduling and coordination of containers, ensuring that they are running where they should be and that they have the resources they need. This makes it possible to run your web app on a large, distributed system without having to worry about the underlying infrastructure.

To get started with Kubernetes, you first need to set up a Kubernetes cluster. This involves installing Kubernetes on a set of servers or virtual machines and configuring them to work together as a single system. There are many tools available for setting up a Kubernetes cluster, including Minikube, Kind, and kubeadm.

Once you have your Kubernetes cluster up and running, you can start deploying your web app. Kubernetes uses a YAML configuration file to define how your application should be deployed and managed. Here's an example of a simple YAML file that defines a deployment for a PHP web app:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-php-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-php-app
  template:
    metadata:
      labels:
        app: my-php-app
    spec:
      containers:
      - name: php
        image: php:7.4-apache
        ports:
        - containerPort: 80

This YAML file defines a deployment for a PHP web app that will run three replicas of the application. It specifies that the application should be deployed using the php:7.4-apache Docker image, which includes the PHP runtime and Apache web server. It also specifies that the application should listen on port 80.

To deploy this application to your Kubernetes cluster, you can use the kubectl apply command:

$ kubectl apply -f my-php-app.yaml

This command will deploy the application and create the Kubernetes resources needed to run it: the deployment itself, a replica set, and the pods. You can use the kubectl get command to see the status of your deployment:

$ kubectl get pods
NAME                          READY   STATUS    RESTARTS   AGE
my-php-app-84f7d8549c-bfzzl   1/1     Running   0          2m
my-php-app-84f7d8549c-ltkbm   1/1     Running   0          2m
my-php-app-84f7d8549c-xvjxg   1/1     Running   0          2m

This command shows that the deployment has created three pods, each running an instance of the PHP web app.

Setting up a Kubernetes cluster

To deploy your PHP web app on Kubernetes, you first need to set up a Kubernetes cluster. A Kubernetes cluster is a set of nodes (servers or virtual machines) that run Kubernetes and work together as a single system.

There are many tools available for setting up a Kubernetes cluster, including Minikube, Kind, and kubeadm. In this guide, we'll use kubeadm to set up a three-node Kubernetes cluster on Ubuntu Server 20.04 (my favourite release).

Step 1: Install Docker and Kubernetes

First, you need to install Docker and Kubernetes on each node in your cluster. You can use the following commands to install these packages:

We use the docker.io package in Debian-based environments, especially on a server, because it is officially supported by Debian and easier to manage than docker-ce. The docker.io package also provides a stable version of Docker that is thoroughly tested for compatibility with Debian-based systems.

Install Docker if it's not already installed:

$ sudo apt-get update
$ sudo apt-get install -y docker.io
$ sudo systemctl enable docker
$ sudo systemctl start docker

Now, it's time to install Kubernetes:

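$ sudo apt-get install -y apt-transport-https ca-certificates curl gpg
$ sudo mkdir -p -m 755 /etc/apt/keyrings
$ curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
$ echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
$ sudo apt-get update
$ sudo apt-get install -y kubelet kubeadm kubectl
$ sudo apt-mark hold kubelet kubeadm kubectl

These commands use the community-owned pkgs.k8s.io repository, because the legacy apt.kubernetes.io repository has been deprecated and shut down. The v1.30 in the URLs is only an example; substitute the Kubernetes minor version you want to install.

Step 2: Initialize the cluster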

Once you have installed Docker and Kubernetes on each node, you can use kubeadm to initialize the cluster on the master node. Run the following command on the master node:

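$ sudo kubeadm init --pod-network-cidr=10.244.0.0/16

This command will initialize the Kubernetes control plane and create a default configuration file (/etc/kubernetes/admin.conf) that you can use to connect to the cluster. To run kubectl as a regular user, copy that file to $HOME/.kube/config, as the output of kubeadm init explains.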
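
The --pod-network-cidr value is not arbitrary: 10.244.0.0/16 is the default pod network in Flannel's manifest, which we install in Step 4. As a sketch, the same setting can be kept in a kubeadm configuration file and passed with kubeadm init --config. The file name kubeadm-config.yaml is an assumption, and the apiVersion depends on your kubeadm release (v1beta3 is shown here; recent releases also accept v1beta4).

```yaml
# kubeadm-config.yaml (hypothetical file name)
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
networking:
  # Must match the network configured in the Flannel manifest
  # (10.244.0.0/16 by default)
  podSubnet: 10.244.0.0/16
```

You would then run sudo kubeadm init --config kubeadm-config.yaml instead of passing the flag on the command line.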

Step 3: Join the worker nodes

Next, you need to join the worker nodes to the cluster. On each worker node, run the kubeadm join command that kubeadm init printed at the end of its output. It has the following form:

$ sudo kubeadm join <master-node-ip>:<master-node-port> --token <token> --discovery-token-ca-cert-hash <hash>

Step 4: Configure the network

Finally, you need to configure the network for your cluster. In this guide, we'll use Flannel, a popular Kubernetes networking solution. Run the following command to install Flannel:

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

This command will create the necessary Kubernetes resources to run Flannel on your cluster.

Packaging your PHP app in a container for Kubernetes deployment

Now that the Kubernetes cluster is set up, it's time to deploy a PHP application and see the result. Here's the simple PHP code that we'll deploy to the Kubernetes cluster:

<?php
// Double quotes are used so the apostrophe in "I'm" does not end the string.
echo "Hello World! I'm running on Kubernetes! ;)";
?>

You can save this code to a file named index.php.

To deploy this PHP application to your Kubernetes cluster, you can create a Docker image that contains your PHP code and run it as a Kubernetes deployment. Here's an example Dockerfile that you can use to build the Docker image:

FROM php:7.4-apache
COPY index.php /var/www/html/

This Dockerfile sets up the Apache web server and copies the index.php file to the default web root directory.

To build the Docker image, run the following command:

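$ docker build -t my-php-app .

This will build the Docker image and tag it as my-php-app. For the nodes of a multi-node cluster to run this image, it also has to be pushed to a container registry that they can pull from.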

Now, we can deploy our PHP web app using the YAML configuration file we saw earlier. Create a file called my-php-app.yaml with the following content:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-php-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-php-app
  template:
    metadata:
      labels:
        app: my-php-app
    spec:
      containers:
      - name: php
        image: php:7.4-apache  # stock image; use your own image's name here to serve index.php
        ports:
        - containerPort: 80

Then, run the following command to deploy your PHP web app:

$ kubectl apply -f my-php-app.yaml

After successfully deploying the PHP web app on Kubernetes using the YAML file, you can access it once you know an IP address of the cluster and the port that the app is exposed on. Keep in mind that a deployment on its own is not reachable from outside the cluster: the pods have to be exposed through a service, such as the LoadBalancer service we create in the next section.

To find the address of the Kubernetes control plane, you can use the following command:

$ kubectl cluster-info

This command should output the address of the control plane (older kubectl versions label it "Kubernetes master"):

Kubernetes master is running at https://xxx.xxx.xxx.xxx:6443
KubeDNS is running at https://xxx.xxx.xxx.xxx:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

Note that port 6443 belongs to the Kubernetes API server, not to your app.

Next, you need to find the port number that the PHP web app is listening on. You can do this by checking the YAML file that you used to deploy the app, specifically the ports section under spec.template.spec.containers. In our example, the container listens on port 80.

Once the app is exposed and you have the IP address and port number, you can access the PHP web app in a web browser by entering the following URL:

http://<IP_ADDRESS>:<PORT_NUMBER>

Replace <IP_ADDRESS> with the external IP address of the service and <PORT_NUMBER> with the port number that the service exposes (in our example, 80).

This should display the output of the PHP web app in the web browser. 😍😍😍

Configuring your Kubernetes deployment for your PHP app

Now that we have a containerized PHP app running in our Kubernetes cluster, it's time to configure our deployment for optimal performance and scalability.

First, let's set the number of replicas for our deployment. This can be done by modifying the replicas field. From here on we keep the manifest in a file named my-php-app-deployment.yaml, which names the deployment my-php-app-deployment and references our own image under the tag my-php-app:v1 (build it with docker build -t my-php-app:v1 . so that the tag matches):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-php-app-deployment
spec:
  replicas: 3 # Change this to the desired number of replicas
  selector:
    matchLabels:
      app: my-php-app
  template:
    metadata:
      labels:
        app: my-php-app
    spec:
      containers:
      - name: my-php-app
        image: my-php-app:v1
        ports:
        - containerPort: 80

Save the changes and apply the updated configuration:

$ kubectl apply -f my-php-app-deployment.yaml

Next, we can configure our deployment to use a horizontal pod autoscaler (HPA) to automatically adjust the number of replicas based on CPU usage. First, we need to install the metrics server in our cluster, which supplies the CPU metrics that the HPA reads:

$ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

With the metrics server running, you can create an HPA (with kubectl autoscale or an HPA manifest) that scales the deployment between 1 and 10 replicas based on CPU usage.
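
The HPA itself is a small resource. As a sketch of what it could look like for the deployment above, the manifest below uses the autoscaling/v2 API. The resource name my-php-app-hpa and the 50% CPU target are assumptions for illustration; the range of 1 to 10 replicas comes from the text. CPU utilization is measured relative to the CPU requests of the containers, so the pod template needs resources.requests.cpu set for this to work.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-php-app-hpa            # hypothetical name
spec:
  scaleTargetRef:                 # the deployment this HPA scales
    apiVersion: apps/v1
    kind: Deployment
    name: my-php-app-deployment
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50    # assumed target, tune for your workload
```

Saved to a file, it would be applied with kubectl apply -f like the other manifests, and kubectl get hpa then shows the current and target utilization.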

Finally, we can configure our deployment to use a load balancer service to distribute traffic across our replicas. This can be done by adding the following YAML to our my-php-app-service.yaml file:

apiVersion: v1
kind: Service
metadata:
  name: my-php-app-service
spec:
  type: LoadBalancer
  selector:
    app: my-php-app
  ports:
  - name: http
    port: 80
    targetPort: 80

Save the changes and apply the updated configuration:

$ kubectl apply -f my-php-app-service.yaml

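This will create a load balancer service that routes traffic to our deployment replicas. On a cluster without a load balancer implementation, such as a bare kubeadm install, the service's external IP stays pending, but the service remains reachable through the node port that Kubernetes allocates for it.

With these configurations in place, our PHP app is set up to scale and to spread traffic across its replicas in a Kubernetes environment.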

Scaling your PHP app up and down as needed to handle traffic spikes

Handling traffic spikes means being able to add and remove capacity quickly. Fortunately, Kubernetes makes it easy to scale your application horizontally by increasing or decreasing the number of replicas of your pods.

To scale your PHP app, you can simply run the following command in your terminal:

$ kubectl scale deployment my-php-app-deployment --replicas=3

This command sets the number of replicas of your PHP app to 3, scaling up if fewer are running. Similarly, if you need to scale down your PHP app, you can use the same command with a lower number of replicas, like this:

$ kubectl scale deployment my-php-app-deployment --replicas=1

This will decrease the number of replicas of your PHP app to 1.

You can also monitor the status of your pods and replica sets using the following commands:

$ kubectl get pods
$ kubectl get replicasets

This will show you the current status of your pods and replica sets. If traffic changes faster than you can react by hand, consider using Kubernetes' autoscaling feature, the horizontal pod autoscaler. Keep in mind that while an HPA manages a deployment, it overrides replica counts set manually with kubectl scale.
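
CPU-based autoscaling works on utilization relative to what each container requests, so the pod template needs CPU requests before an HPA can compute a percentage. A minimal sketch of that part of the deployment follows; the request and limit values are placeholder assumptions, not recommendations.

```yaml
# Fragment of spec.template.spec in my-php-app-deployment.yaml
containers:
- name: my-php-app
  image: my-php-app:v1
  ports:
  - containerPort: 80
  resources:
    requests:
      cpu: 250m       # assumed value; HPA utilization is measured against this
      memory: 128Mi   # assumed value
    limits:
      memory: 256Mi   # assumed value
```

Requests also tell the scheduler how much room a pod needs on a node, which matters once the replica count starts to grow.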

Ensuring high availability and fault tolerance with service discovery and load balancing

High availability and fault tolerance are crucial for running a reliable PHP application. In Kubernetes, you can achieve this by using a combination of services and load balancers.

When you create a deployment for your PHP app, Kubernetes assigns each pod its own IP address. However, these addresses change whenever a pod is rescheduled or recreated. To avoid depending on them, you can create a Kubernetes service that acts as a stable endpoint for your PHP app.

Take a look at the service YAML file we wrote for our PHP app. The service exposes port 80 on a stable IP address that can be used to access your PHP app. Additionally, type: LoadBalancer asks the underlying platform for an external load balancer, and traffic sent to the service is distributed across the replicas of your PHP app.

To ensure high availability and fault tolerance, Kubernetes can also restart your PHP app automatically if it crashes or becomes unresponsive. Pods created by a deployment always use restartPolicy: Always, which is the default and the only value a deployment accepts, so crashed containers are restarted without extra configuration. To detect an app that is still running but no longer responding, add a liveness probe to the container.
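
Kubernetes can only notice an unresponsive app if it is told how to check it. As a sketch, liveness and readiness probes could be added to the container in the deployment like this; the probe path and the timing values are assumptions and should be adapted to a real app.

```yaml
# Fragment of spec.template.spec.containers in the deployment
- name: my-php-app
  image: my-php-app:v1
  ports:
  - containerPort: 80
  livenessProbe:            # restart the container if this check keeps failing
    httpGet:
      path: /               # assumes the app answers with a 2xx or 3xx status on /
      port: 80
    initialDelaySeconds: 10
    periodSeconds: 10
  readinessProbe:           # stop routing service traffic to the pod while failing
    httpGet:
      path: /
      port: 80
    periodSeconds: 5
```

The two probes serve different purposes: a failing liveness probe leads to a container restart, while a failing readiness probe only removes the pod from the service's endpoints until it recovers.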

Monitoring and logging for performance tracking and issue identification

Monitoring and logging are essential components in any production environment, as they help track performance metrics, identify potential issues, and troubleshoot problems as they arise. The Kubernetes ecosystem offers several tools that enable you to monitor your PHP app and collect relevant data.

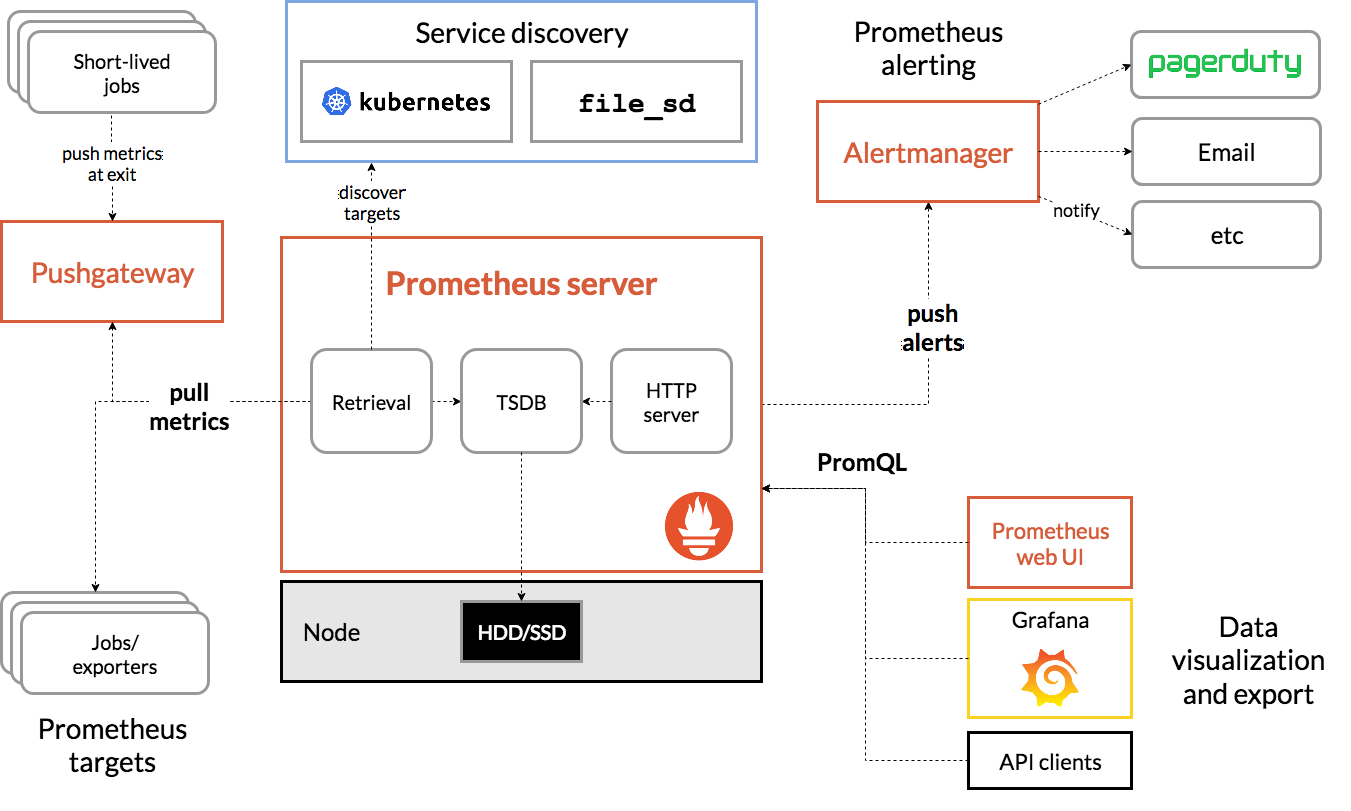

One such tool is Prometheus, an open-source monitoring system that allows you to collect and store metrics from different services and applications. Prometheus is particularly useful in a Kubernetes environment, as it can automatically discover and monitor services running on a Kubernetes cluster.

This diagram illustrates the architecture of Prometheus and some of its ecosystem components:

To set up Prometheus for your PHP app, you will need to create a Prometheus deployment and service, configure the Prometheus server to scrape metrics from your PHP app, and create a dashboard to view the collected metrics. Here's an example YAML file that creates a Prometheus deployment and service:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - name: prometheus
        image: prom/prometheus:v2.43.0
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        ports:
        - containerPort: 9090
        volumeMounts:
        - name: prometheus-config
          mountPath: /etc/prometheus/
      volumes:
      - name: prometheus-config
        configMap:
          name: prometheus-config
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus-service
spec:
  selector:
    app: prometheus
  type: ClusterIP
  ports:
  - name: web
    port: 9090
    targetPort: 9090
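
The deployment above mounts a ConfigMap named prometheus-config, which has to exist before the pod can start. A minimal sketch of it follows. The scrape interval, the job name, and the assumption that the PHP app serves Prometheus metrics at /metrics behind my-php-app-service are illustrative; the hello-world app in this guide does not expose metrics on its own.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config         # name referenced by the deployment's volume
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s        # assumed interval
    scrape_configs:
    - job_name: php-app           # hypothetical job name
      metrics_path: /metrics
      static_configs:
      # Service DNS name and port; assumes the app exposes metrics there
      - targets: ["my-php-app-service:80"]
```

With a static target like this, Prometheus scrapes through the service, so each scrape reaches whichever replica the service picks. Per-pod metrics would need Kubernetes service discovery in the scrape configuration instead.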

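If you run Prometheus through the Prometheus Operator, you can configure it to scrape metrics from your PHP app by adding a ServiceMonitor resource. ServiceMonitor is a custom resource defined by the operator, so a plain Prometheus deployment like the one above does not pick it up. Here's an example YAML file that creates a ServiceMonitor resource for a PHP app: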

apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: php-app
spec:
  selector:
    matchLabels:
      app: php-app
  endpoints:
  - port: web
    path: /metrics

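This ServiceMonitor resource tells Prometheus to scrape the /metrics endpoint of every service labeled app: php-app, on the service port named web. A ServiceMonitor selects services, not deployments, so modify the selector field to match the labels on your PHP app's service, and set the port to the name of that service's port.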
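
To make the matching concrete, here is a sketch of a variant of the PHP app's service that this ServiceMonitor would select. The label on the service and the port name web are assumptions chosen to line up with the ServiceMonitor above, and the app is assumed to serve metrics at /metrics on port 80.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-php-app-service
  labels:
    app: php-app          # matched by the ServiceMonitor's selector.matchLabels
spec:
  type: LoadBalancer
  selector:
    app: my-php-app       # pod labels from the deployment
  ports:
  - name: web             # matched by the ServiceMonitor's endpoints port
    port: 80
    targetPort: 80
```

Note that the label under metadata identifies the service itself, while spec.selector picks the pods behind it; the two do not have to be the same.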

Once you have set up Prometheus to monitor your PHP app, you can use Grafana to visualize the collected metrics and create custom dashboards. Grafana is an open-source tool that allows you to create and share dashboards for different data sources, including Prometheus.

In addition to monitoring, logging is also crucial for tracking the performance of your PHP app and identifying potential issues. Several logging solutions are commonly used with Kubernetes, including Fluentd and Elasticsearch, that allow you to collect, store, and analyze logs from different containers and applications.

To set up logging for your PHP app, you will need to create a logging deployment and service and configure the logging agent to collect logs from your PHP app's containers. Here's an example YAML file that creates a logging deployment and service:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: logging-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logging
  template:
    metadata:
      labels:
        app: logging
    spec:
      containers:
      - name: fluentd
        image: fluent/fluentd:v1.13-1
        env:
        - name: FLUENTD

Continuous integration and delivery (CI/CD)

Continuous integration and delivery (CI/CD) is a crucial aspect of modern software development. With CI/CD, developers can quickly and automatically test and deploy code changes, enabling faster and more reliable releases. In a Kubernetes environment, CI/CD workflows can be easily implemented using popular tools such as Jenkins, GitLab CI/CD, and Travis CI.

To set up a CI/CD pipeline for your PHP app in Kubernetes, you will need to integrate your chosen CI/CD tool with your Kubernetes cluster. This can typically be achieved by configuring a Kubernetes cluster as a deployment target in your CI/CD tool's settings. Once this is done, you can create a pipeline that builds and tests your PHP app, packages it in a container, and deploys it to your Kubernetes cluster.

A typical CI/CD pipeline for a PHP app in Kubernetes might include the following steps:

- Check out code from your version control system (such as Git).

- Build your PHP app and package it in a container.

- Run automated tests to ensure the app is functioning as expected.

- Push the container to a container registry, such as Docker Hub or Google Container Registry.

- Deploy the new container to your Kubernetes cluster using kubectl or a Kubernetes API client.
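
The steps above can be sketched as a pipeline definition. The example below assumes GitLab CI/CD with the project's built-in container registry, a runner that allows Docker-in-Docker, and a deploy job that already has kubectl and cluster credentials available; none of these come from this guide's setup. It also runs a simple syntax check before building, as a stand-in for a real test suite. Checking out the code is done by GitLab automatically at the start of each job.

```yaml
# .gitlab-ci.yml (sketch)
stages:
  - test
  - build
  - deploy

variables:
  # Image in the project's GitLab container registry, tagged per commit
  IMAGE: $CI_REGISTRY_IMAGE:$CI_COMMIT_SHORT_SHA

lint:
  stage: test
  image: php:7.4-cli
  script:
    - php -l index.php          # syntax check only

build-and-push:
  stage: build
  image: docker:24
  services:
    - docker:24-dind            # assumes the runner allows Docker-in-Docker
  script:
    - docker login -u "$CI_REGISTRY_USER" -p "$CI_REGISTRY_PASSWORD" "$CI_REGISTRY"
    - docker build -t "$IMAGE" .
    - docker push "$IMAGE"

deploy:
  stage: deploy
  # Assumes the job's image provides kubectl and access to the cluster
  script:
    - kubectl set image deployment/my-php-app-deployment my-php-app="$IMAGE"
    - kubectl rollout status deployment/my-php-app-deployment
```

Tagging each image with the commit SHA, rather than reusing a fixed tag, means every deployment changes the pod template, so Kubernetes performs a rolling update and kubectl rollout status can report whether it succeeded.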

By automating this process, you can ensure that changes to your code are thoroughly tested and deployed quickly and reliably, reducing the risk of errors and improving the overall quality of your software. Additionally, CI/CD pipelines can provide valuable insights into the performance and stability of your PHP app, allowing you to quickly identify and resolve issues as they arise.

Conclusion

In this post, we learned a lot about deploying and managing scalable PHP web applications with Kubernetes. First, we introduced Kubernetes and its benefits for scaling web applications. Then, we showed you how to set up a Kubernetes cluster for your PHP app, package your app in a container, configure your deployment, scale your app, ensure high availability, and monitor and log performance for issue identification. We also emphasized the importance of implementing a CI/CD pipeline to streamline the deployment process and maintain consistent quality across all releases. By following these steps, you now have a solid foundation for deploying and managing scalable PHP web applications with Kubernetes. Keep learning and refining your processes to achieve the best possible outcomes for your organization.